All COVID-19 models are wrong...but some are potentially useful

Update 14-Apr-2020: Since I wrote this post, discussions of imperfect models have become quite prevalent in scientific circles. I particularly enjoyed this comic published on FiveThirtyEight and this article on case surveillance and testing by Nate Silver.

As I write this, the volume of data and models claiming to assess the impact of COVID-19 on various populations is staggering. Much of this work has not been peer reviewed. In fact, preprint articles (i.e. academic manuscripts that have not undergone peer review) on COVID-19 have surpassed 750 on just two preprint servers, and there are many more servers. As policy is being informed by speculation, it is important to acknowledge what these models can and cannot do. I am reminded of an oft-(mis)quoted adage among epidemiologists and statisticians: all models are wrong, but some are useful.

The most widely known model predicting the impact of COVID-19 is the Imperial College report entitled "Impact of non-pharmaceutical interventions (NPIs) to reduce COVID-19 mortality and healthcare demand" by Ferguson et al. The primary end point the authors examine is the impact of COVID-19 on the healthcare system in Great Britain and the U.S. They tested various interventions, in isolation and in combination, to assess how policy can mitigate the burden on the healthcare system. As a secondary outcome, they also examined mortality. Two response strategies were evaluated: mitigation and suppression. Under mitigation, the reproduction number R (the average number of secondary infections caused by each case) stays above one. That is, the focus is on slowing the pandemic, but not eliminating it. This is equivalent to the response that most of the world is currently undertaking and is often referred to as "flattening the [epidemic] curve". Under suppression, R drops below one. The focus is on halting the spread and eradicating the virus from the population. Suppression requires sustained, drastic public health and population-level quarantine measures, which are sure to precipitate harmful indirect consequences. This is similar to what was implemented in Wuhan, China.
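Why the threshold of one for the reproduction number separates the two strategies can be illustrated with a minimal sketch of a discrete-time SIR (susceptible, infectious, recovered) model. This is not the Ferguson et al. model, which is a far more detailed individual-based simulation; the function name `simulate_sir`, the population size, the five-day infectious period, and the R values below are hypothetical choices for illustration, and R is held constant over the whole run.

```python
def simulate_sir(r, days=365, population=1_000_000, initial_infected=100, infectious_days=5.0):
    """Hypothetical discrete-time SIR sketch; returns the number infectious on each day.

    r is held constant, so early in the outbreak (when nearly everyone is
    susceptible) each case generates roughly r new cases.
    """
    gamma = 1.0 / infectious_days   # daily recovery probability
    beta = r * gamma                # daily transmission rate implied by r
    susceptible = population - initial_infected
    infectious = float(initial_infected)
    history = [infectious]
    for _ in range(days):
        new_infections = beta * susceptible * infectious / population
        new_recoveries = gamma * infectious
        susceptible -= new_infections
        infectious += new_infections - new_recoveries
        history.append(infectious)
    return history


# Mitigation-like scenario (R above one) versus suppression-like scenario (R below one)
mitigation = simulate_sir(r=2.5)
suppression = simulate_sir(r=0.8)
print(f"R = 2.5: peak infectious {max(mitigation):,.0f}")
print(f"R = 0.8: peak infectious {max(suppression):,.0f}, day-365 infectious {suppression[-1]:.2f}")
```

With R above one, the number infectious climbs far above the initial 100 before the pool of susceptibles is depleted; with R below one, it shrinks from the very first day. Lowering R while keeping it above one lowers the peak, which is the "flattening the curve" idea in its simplest form.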

A nice feature of models such as the one by Ferguson et al. is that they allow a wide range of assumptions to be readily tested and tweaked, in essence creating counterfactual worlds. They are useful only from a "what-if" perspective: what happens to a specific model output if I change one or more input parameters? These models are appealing because they can evaluate a range of potential policy proposals to see which has the greatest impact in the artificial world, and they also allow investigators to test the robustness of their assumptions. Because the world they are attempting to model is infinitely complex, these models are reduced to a handful of core constructs and components that the researchers feel are necessary to capture the dynamics of an infectious disease outbreak. For example, Ferguson et al. captured the mixing of populations in the home, the school/work environment, and the community in general, treating each in some sense as a homogeneous unit within a given area, aligned to Census indicators. To provide some assurance that the model reflects reality, it can be calibrated to existing data. This demonstrates that the model accurately describes historic data; it says nothing about the future. Unfortunately, one of the prime features of a counterfactual model appears to be absent from the Ferguson et al. paper: precision estimates (e.g. uncertainty intervals) around the estimates of effectiveness. To ensure that randomness is not driving the conclusions, these types of models should draw their inputs stochastically from distributions and be run hundreds, if not thousands, of times. The uncertainty across model runs can then be quantified.
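The kind of uncertainty analysis described above can be sketched as a simple Monte Carlo loop: draw the uncertain inputs from distributions, rerun the model many times, and summarize the spread of the outputs. In this minimal sketch the model is a hypothetical deterministic SIR model, the uniform ranges for R and the infectious period are made up for illustration, and the output is the share of the population ever infected. A fully stochastic model, such as an individual-based simulation, would also randomize the transmission events themselves.

```python
import random
import statistics


def attack_rate(r, infectious_days, days=730, population=1_000_000, initial_infected=100):
    """Hypothetical deterministic SIR run; returns the fraction of the population ever infected."""
    gamma = 1.0 / infectious_days
    beta = r * gamma
    susceptible = population - initial_infected
    infectious = float(initial_infected)
    for _ in range(days):
        new_infections = beta * susceptible * infectious / population
        susceptible -= new_infections
        infectious += new_infections - gamma * infectious
    return (population - susceptible) / population


def monte_carlo(n_runs=1000, seed=2020):
    """Rerun the model with inputs drawn at random; return (median, 2.5th, 97.5th percentile)."""
    rng = random.Random(seed)  # fixed seed so the analysis is reproducible
    results = []
    for _ in range(n_runs):
        r = rng.uniform(1.5, 3.0)                # assumed range for R
        infectious_days = rng.uniform(4.0, 7.0)  # assumed range for the infectious period (days)
        results.append(attack_rate(r, infectious_days))
    cut_points = statistics.quantiles(results, n=40)  # 39 cut points at 2.5% steps
    return statistics.median(results), cut_points[0], cut_points[-1]


median, lower, upper = monte_carlo()
print(f"Share ever infected: {median:.1%} (95% interval {lower:.1%} to {upper:.1%})")
```

Reporting the interval alongside the central estimate is what allows a reader to judge whether the difference between two policy scenarios is larger than the model's own uncertainty.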

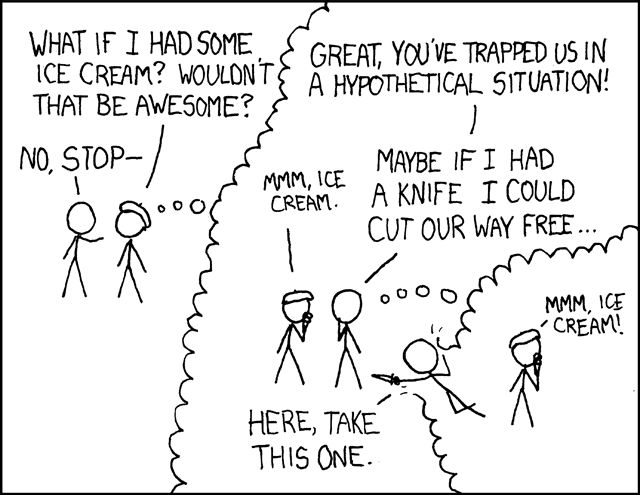

Reprinted from XKCD https://xkcd.com/248/

I would also caution against becoming fixated on the absolute number of events projected by this model, as has unfortunately been done extensively in the media. One often-quoted passage from the Ferguson et al. paper is that "approximately 510,000 deaths in [Great Britain] and 2.2 million [deaths] in the US" would occur if we did nothing. There are several problems with this claim. First, it is entirely fictitious: no place has failed to respond in some respect, so this reality does not exist. Second, there is no measure of precision around these estimates (i.e. confidence intervals), so we cannot be sure that this is not just a random finding. Third, the output is extrapolated from the model, and the past may not predict the future. Rather, the utility of these estimates is to provide a baseline comparison group for estimating the relative impact of various interventions. And that, I believe, should be the focus of these types of papers: not the absolute numbers but the relative (%) differences.
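To illustrate the emphasis on relative rather than absolute differences, here is a minimal sketch that expresses each scenario's outcome as a percent reduction from the do-nothing baseline. The function name `percent_reduction`, the scenario names, and the death counts are hypothetical round numbers, not outputs from Ferguson et al. The final check shows one reason relative comparisons can be more robust: if a model's absolute scale is off by a common factor across all scenarios, the percent reductions are unchanged (errors that differ between scenarios would still matter).

```python
import math


def percent_reduction(baseline, scenario):
    """Percent reduction in an outcome (e.g. deaths) relative to the baseline scenario."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - scenario) / baseline


# Hypothetical model outputs (deaths) under each scenario
scenarios = {"do nothing": 1000.0, "intervention A": 600.0, "interventions A + B": 250.0}
baseline = scenarios["do nothing"]
for name, deaths in scenarios.items():
    print(f"{name}: {percent_reduction(baseline, deaths):.0f}% reduction")
# do nothing: 0% reduction
# intervention A: 40% reduction
# interventions A + B: 75% reduction

# Scaling every absolute output by the same (unknown) factor leaves the relative reductions unchanged
scale = 3.0
assert math.isclose(percent_reduction(baseline * scale, 600.0 * scale),
                    percent_reduction(baseline, 600.0))
```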

There are numerous other models and modelers out there, for example https://covidactnow.org, http://gabgoh.github.io/COVID/index.html, and https://covid19.healthdata.org/projections. One article that has been generating a lot of interest and publicity is entitled "The Hammer and the Dance". It quotes data from these simulation models. The results of these models, as interpreted by others, imply a sense of urgency and suggest that policies need to happen immediately, often without consideration of the ancillary impacts. In fact, Ferguson et al. acknowledge this, stating in the Introduction that "We do not consider the ethical or economic implications of either strategy here", and further driving home the point in the conclusion: "No public health intervention [suppression] with such disruptive effects on society has been previously attempted for such a long duration of time. How populations and societies will respond remains unclear." "The Hammer and the Dance" is clearly an advocacy article, not a research article. A disclaimer by the publishing host, Medium, refers readers to the CDC, as the data have not been fact-checked. Although the article quotes model output without acknowledging the important limitations of these models, the authors usefully suggest that basic epidemiological outbreak work can make a substantial difference, as evidenced in South Korea: contact tracing, widespread testing, and isolation and quarantine of those infected or in contact with those infected, all without strict lock-down procedures. This is the crux of shoe-leather outbreak epidemiology, and it is what we need more of.

Another popular claim is that our response should assume a worst-case perspective; this perspective is inherent in the models that consider suppression. Governments feel that they must act immediately based upon flawed models. And yet there is some evidence that waiting can have disastrous consequences for the healthcare system, as the experience in Italy suggests. A balance is needed rather than an all-or-nothing approach, especially when the science is so uncertain and derived from purely hypothetical worlds. That is not to say these models are without value: they can and should consider the long-term implications of enacting a policy, including whom it affects and in what ways. For example, consider a policy to close public playgrounds on the premise that this will reduce pathogen transmission between children and adults at the playground, and thus reduce community infections. This could easily be demonstrated through a simulation model, as we are reducing the number of contacts that an individual has. Now consider the implications of this policy: in urban areas, especially impoverished ones, the only access that children may have to open space and fresh air is a public playground. Further, if there are few or no transportation options available, these children will have no other place to go. Contrast this with a family that has the means to simply find another park or open-air space. This will clearly lead to adverse physical and mental health consequences for these children and their families. A potential analogy is the response to natural disasters: Hurricane Katrina resulted in long-term adverse impacts on children. An excellent article that discusses the potential fallout was recently published in The Atlantic.

And this brings me to the last important point to consider from these models: they are not geared to evaluate real-world policy implications. As these models are gross simplifications of reality constructed in a hypothetical and fictitious world, they operate within a very specific set of boundaries. The indirect impacts of acting on model outputs need to be considered, discussed, and debated with experts in infectious disease science before any policy is enacted. Relatedly, a single model should not inform decisions, even if it is very convincing on paper. A model in and of itself is a "what if" exercise conducted in a hypothetical world. What is needed is a preponderance of evidence from multiple sources, as any single source can be biased. Models provide one (contrived) source of data. There are now a host of natural and quasi-experiments in populations around the country and world that can provide empirical evidence to supplement the results of a given simulation. Computational models, such as the one by Ferguson et al., provide insight into aspects of infection that are uncertain and need empirical evidence; they are not a substitute for it.

Cite: Goldstein ND. All COVID-19 models are wrong...but some are potentially useful. Mar 25, 2020. DOI: 10.17918/goldsteinepi.